Community Moderation

What is community moderation?

Moderation means different things to different people and a large part of an organisation’s approach to moderation is contingent on their understanding of what it is and what they are trying to achieve.

For most digital platforms, moderation refers to the practice of identifying and deleting content by applying a pre-determined set of rules and guidelines. RNW Media implements ‘community moderation’, which aims to encourage young people to engage in respectful dialogue, allowing everyone to have a voice. Careful strategic moderation of online conversations helps build trust among community members who then feel safe to express themselves freely. This, in turn, nurtures diverse and resilient communities with strong relationships among members.

- RNW Media wants to bring people together to engage with one another and with different points of view

- RNW Media wants young people to think critically about the challenges facing their society

- RNW Media wants to increase users’ knowledge, and in some cases, change their attitudes as a result of exposure to our platforms and communities. We therefore encourage a diversity of viewpoints.

- RNW Media creates a safe space where women are encouraged and feel safe to participate.

Moderation is a tool to build a vibrant, respectful and inclusive digital community. It is an important tool to communicate with our users and it allows us to bring marginalised groups into the conversations. We encourage users to actively participate in the conversation and create a safe space where young people not only feel able to take part but also benefit from doing so.

From a more operational perspective, moderation offers us the chance to set the tone of discussion on certain content, bring users back on topic if they go on a tangent, defuse tense conversations, provide additional information or answer users’ questions, and propagate a healthy, respectful dialogue.

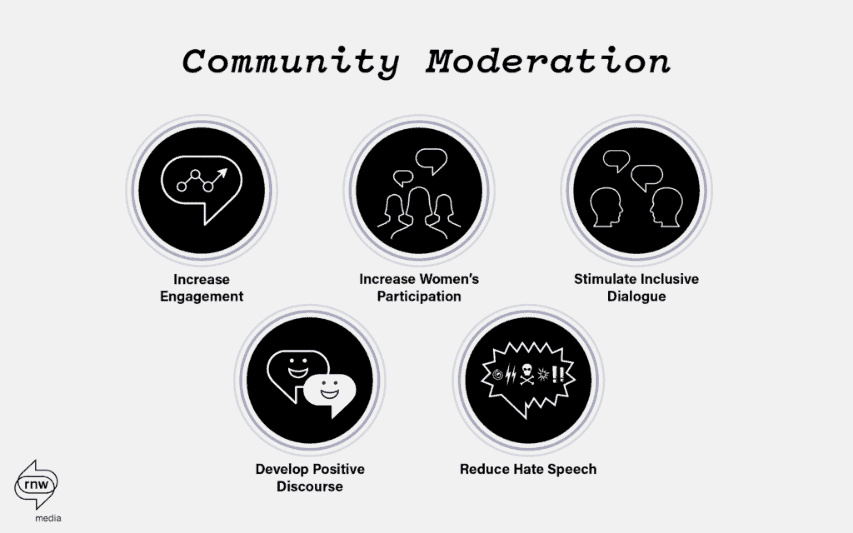

The goals of moderation

Online abuse and harassment can discourage people from discussing important issues or expressing themselves, and can lead them to give up on seeking different opinions. Therefore, discussions on our platforms are moderated with the aim of ensuring they remain a safe place for discussion and debate.

The moderator

Moderating conversations, by participating in them, has considerable benefits: it is faster, more flexible and responsive; and it retains the principle of free and open public spaces for debate.

Moderators play an important role in encouraging users to engage with each other respectfully and in letting young people know that their opinion is valid, regardless of their political and socio-cultural affiliation, gender, tribe etc. By engaging with people who comment on posts, moderators validate that their opinion is important and by asking specific questions on posts and comments, moderators encourage young people to think critically and engage in constructive discussion.

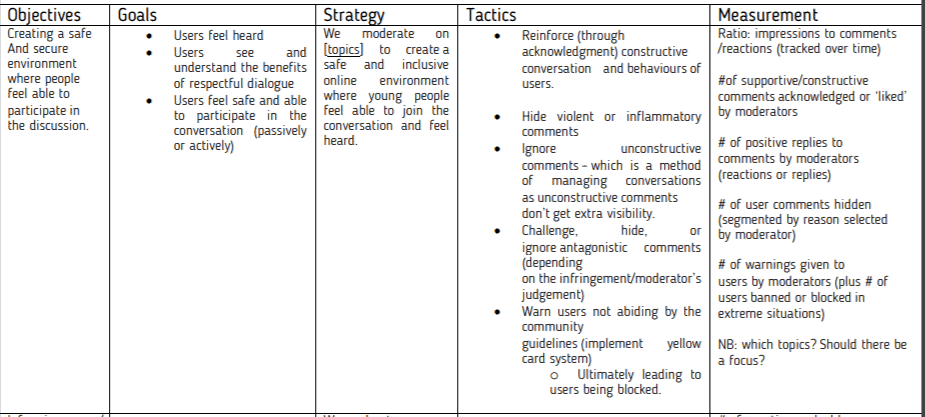

The moderators can use a grid framework to understand the impact of their work and assess the success of moderation in relation to objectives and in specific situations.

A snapshot from the grid used in the Citizens’ Voice programme, for example:

The tactics and measurements used cannot always be automated or followed all the time, but the grid can be developed to support moderators in their work and should be re-thought and updated regularly.

The table below shows some example indicators from RNW Media’s indicator framework that relate to measuring the impact of moderation.

| MAIN INDICATOR | WHY DO WE MEASURE THIS? | SPECIFIC METRICS |
| --- | --- | --- |
| # of comments on website (by theme) | To see how many people actively share their thoughts in response to website articles and discussion board threads, and which content generates the most engagement. | # of comments on website articles per theme; # of discussion board comments per theme |
| # of engagements on social media (by theme) | To see how many times our social media content receives reactions (likes/emojis), comments or shares, and which content generates the most engagement. | # of reactions/likes per theme; # of comments/replies per theme; # of shares/reposts per theme |
| # of moderator engagements with users | To show how our moderators engage with users, either through moderating comments, or responding to user questions in private messages, which tells us to what extent our platforms are considered to be a reliable source of information. | # of Discussion Board threads (by theme); # of user questions answered by country teams (by theme); # of moderator responses in discussions (by theme) |
| # of interactions between users | To show how often users respond to each other rather than just to the content. | # of comments to comments on social media; # of user comments to discussion board threads |
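
As a rough illustration of how some of the specific metrics above could be tallied from an export of comments, the sketch below counts user comments per theme, moderator responses per theme, and user-to-user replies. The `Comment` record, its field names and the `"user"`/`"moderator"` role labels are hypothetical and are not part of RNW Media’s indicator framework; a real export would need to be mapped onto something similar first.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Comment:
    # All fields are assumptions made for this illustration.
    comment_id: str
    theme: str
    author_role: str                 # "user" or "moderator"
    reply_to: Optional[str] = None   # id of the parent comment, None if replying to the content


def indicator_counts(comments: List[Comment]) -> Dict[str, object]:
    """Tally a few moderation-related metrics from a list of comments."""
    by_id = {c.comment_id: c for c in comments}

    # Number of user comments per theme.
    comments_per_theme = Counter(c.theme for c in comments if c.author_role == "user")

    # Number of moderator responses in discussions per theme.
    moderator_responses = Counter(c.theme for c in comments if c.author_role == "moderator")

    # Number of user comments that reply to another user's comment.
    user_to_user = sum(
        1
        for c in comments
        if c.author_role == "user"
        and c.reply_to in by_id
        and by_id[c.reply_to].author_role == "user"
    )

    return {
        "comments_per_theme": comments_per_theme,
        "moderator_responses_per_theme": moderator_responses,
        "user_to_user_replies": user_to_user,
    }


if __name__ == "__main__":
    sample = [
        Comment("c1", "jobs", "user"),
        Comment("c2", "jobs", "user", reply_to="c1"),
        Comment("c3", "jobs", "moderator", reply_to="c1"),
        Comment("c4", "health", "user"),
    ]
    print(indicator_counts(sample))
```

Counts like these only describe volume; interpreting them against the objectives remains a matter for the moderators and the programme team.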

Moderation responsibilities

- Moderators should monitor social media regularly – at least once a day. Engagement keeps a community enthused. If not monitored, though, sites and pages can quickly become filled with spam and offensive or negative comments that ultimately drive users away.

- It is important that moderators are available to manage the distribution of new content – particularly on norm topics – this is to set the tone, keep the discussion on topic and, ultimately, to help the conversation go further. They should work closely with community managers and marketeers and know when content will be published.

- Moderators enforce the community guidelines and ensure the community is safe and that users feel able to join the discussion.

- Moderators teach by example, challenging unsavoury viewpoints and demonstrating how to have robust yet respectful conversations.

Selecting and applying moderation techniques

There are a few basic rules when moderating discussions:

- Always answer user questions.

- Contribute in a respectful, non-judgemental way – but don’t be afraid to challenge users.

- Ignoring comments, i.e. not replying, is a form of moderation. Especially on social media, not replying to a comment automatically diminishes the potential reach of that comment.

- We never delete comments – and we only hide them if they are abusive, violent, or offensive.

- We focus our moderation on norm/core topics – although comments of an abusive, violent, or offensive nature are to be dealt with regardless.

- Violent, abusive, or offensive comments have no place on Citizens’ Voice communities – they should be immediately hidden, and private messages sent to offenders.

- Spam should always be hidden, and repeat offenders blocked.

- There should be short and clear behaviour guidelines on the site/page, that you can direct users to.

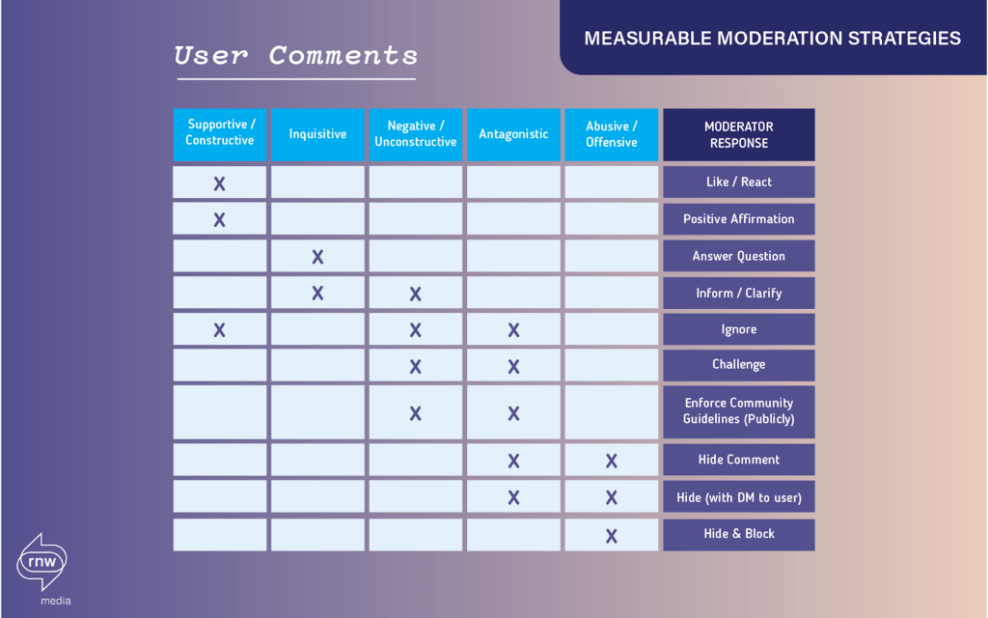

With these basic rules in mind, users’ comments and intentions can be categorised, based on the moderator’s judgement. Moderators don’t have to record and categorise all user comments, but when deciding how to respond, the descriptions below can serve as a guide:

- Supportive/constructive; a user responding to the page or another user with the intention of having a respectful discussion.

- Inquisitive; users asking a question, clarifying content, or requesting more information on the topic.

- Negative/unconstructive; negative response from a user to another user or the page – not really seeking deeper conversation. In the polarisation context, this would be monologuing.

- Antagonistic; a user participating in the conversation but not with good intentions – really aiming to stir up discord.

- Abusive/offensive; this can be anything from threats of violence, hate speech, etc.

How to deal with negative or antagonistic comments

If a user is posting negative comments, these should be treated with caution but not removed. A forum that only has positive comments does not host genuine discussion, and that is not what we are trying to achieve. The moderator should monitor negative/unconstructive comments but not act unless they threaten to dominate the entire conversation, in which case you need to review why people are being so critical.

If a negative comment is inaccurate, then it is important to add content or additional information which resolves the inaccuracies or adds an alternative view. Alternatively, the moderator can ask a negative commenter to elaborate on their point. Antagonistic comments are usually the work of trolls, who are purposefully aiming to derail the conversation and prevent a meaningful discussion from taking place.

So, with negative or antagonistic comments, moderators have 3 options:

- Challenge (This could take the form of questioning the user, providing information/research to dispute their claims, or directing the user to the community guidelines)

- Ignore (Which is a method of managing conversations as unconstructive comments don’t get extra visibility)

- Hide (This should be applied for repeat offenders, at the moderator’s discretion, and should be accompanied by a private message)

- If a user becomes a nuisance on the page and is unwilling to follow community guidelines after warnings, consider banning them.

How to deal with abusive or offensive comments

Abusive comments include offensive, abusive, obscene or discriminatory comments, personal attacks and incitements to violence. They should not be tolerated under any circumstances. If abusive or offensive comments are made, the moderator should hide the comments as soon as they are seen.

Depending on the moderator’s judgement they should message the user and either inform them this is not that kind of community or deliver a yellow card. If a user offends repeatedly, or it’s obvious they are a spammer, consider blocking them but be transparent and consistent. Never ban someone just for being critical or having a controversial opinion. If in doubt assess the comments against your community guidelines.

After banning a user, the circumstances can be logged in a report.

Hate speech, including personal attacks, discrimination, prejudice and abuse, should be handled with care:

1. Opposing viewpoints in the form of comments on posts are not deleted unless they contain extreme ‘hate speech’.
2. Our moderators engage with users who comment with toxic (disrespectful, unreasonable) language to encourage them to express their viewpoints in a positive, respectful manner.
3. In the cases of extreme hate speech, comments are hidden or deleted, and the user is asked to follow community guidelines and engage respectfully.
4. Users who ignore the guidelines and persistently engage in hate speech will be blocked in the interest of inclusivity of the larger community. This is necessary to build and maintain a trusted online community where views can be expressed safely.

The video below offers a summary of the ways in which you can handle negative comments:

How to acknowledge and reinforce respectful user practices

It is equally important to acknowledge users respectfully participating in the conversation and abiding by the community guidelines. Moderators can do this by thanking users for their contributions, liking their comments (or replies) and thus giving prominence to these comments in the thread, or replying to users in a positive manner.
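
For teams that keep a moderation log or build simple tooling around it, the guidance in the sections above can be summarised as a lookup from comment category to suggested responses. This is only a minimal sketch: the `Category` names follow the five categories described earlier, while the function name, the `repeat_offender` flag and the wording of the responses are assumptions made for illustration. It does not replace the moderator’s judgement, which decides both the category and the final action.

```python
from enum import Enum
from typing import List


class Category(Enum):
    SUPPORTIVE = "supportive/constructive"
    INQUISITIVE = "inquisitive"
    NEGATIVE = "negative/unconstructive"
    ANTAGONISTIC = "antagonistic"
    ABUSIVE = "abusive/offensive"


def suggested_responses(category: Category, repeat_offender: bool = False) -> List[str]:
    """Return the responses the guidance suggests; the moderator still decides."""
    if category is Category.SUPPORTIVE:
        return ["thank the user", "like the comment", "reply positively"]
    if category is Category.INQUISITIVE:
        # Basic rule: always answer user questions.
        return ["answer the question"]
    if category is Category.ABUSIVE:
        # Abusive or offensive comments are hidden as soon as they are seen.
        responses = ["hide the comment", "send a private message"]
        if repeat_offender:
            responses.append("consider blocking")
        return responses
    # Negative/unconstructive or antagonistic comments.
    if repeat_offender:
        return [
            "hide the comment",
            "send a private message",
            "consider banning if warnings are ignored",
        ]
    return ["challenge", "ignore"]


if __name__ == "__main__":
    for category in Category:
        print(category.value, "->", suggested_responses(category))
```

The function returns a list of options rather than a single action, because for negative or antagonistic comments the choice between challenging and ignoring is left to the moderator.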

How to deal with polarisation

It’s important to understand when you are encountering polarisation. Polarisation in its simplest form is: we are right, they are wrong. Polarisation is an artificial construction of identities. It’s about people who are being targeted by narrow identity communication to choose sides. Pushers try to lure them into polarisation. The definition of the problem and problem ownership is not very clear.

When dealing with polarised situations we have four options:

- Change the target audience. Pushers portray an enemy in the other pusher and target the middle ground. That’s where polarisation is intended. So, target the middle ground for depolarisation. This could take the form of ignoring extreme, polarised positions and looking for and highlighting the opinions of the middle.

- Change the topic. Move away from the identity construct chosen by the pushers and start a conversation on the common concerns and interests of those in the middle ground. Apply the aspirational approach. We already do this with our content, but let’s get the conversation back to the issues that all young people are experiencing – price of rice etc.

- Change position. Don’t act above the parties, in between the poles, but move towards the middle ground. Stop trying to build bridges (positioning above the poles) and instead take a position in the middle (connected and mediating).

- Change the tone. This is not about right or wrong or facts. Use mediating speech and try to engage and connect with the diverse middle ground. Moderators here should not moralise, nor ask who is guilty but should focus on the development of mediating speech and behaviour with a non-judgemental approach to moderation.

Moderation by representative teams

The content and editorial teams of the different Citizens’ Voice projects are diverse and include (where applicable) people of different genders, regions, races, religions, tribes and socio-economic classes.

As online moderation of discussions between users on the digital platforms is an important tool to stimulate inclusive dialogue, it is essential to ensure that the moderators and community managers of the platforms are also truly representative of the online communities in the countries they operate in.

Go to Assignment 7.2.2: Be the moderator.

Key takeaways

- Moderation is a tool to build an inclusive digital community

- Moderation is an important tool to communicate with users and bring marginalised groups into the conversations

- Moderation can be applied to create a safe space where young people are encouraged to think critically and engage in constructive discussion

- Moderating conversations is complex

- Different frameworks and grids can support moderators in their daily work

- Comments can be categorised as: Supportive/constructive; Inquisitive; Negative/unconstructive; Antagonistic; Abusive/offensive. The response of the moderator can depend on these categories

- Extra attention should be paid to polarised situations and how to respond to them.