Moderation Strategy

What is moderation?

For most digital platforms, moderation refers to the practice of identifying and deleting content by applying a pre-determined set of rules and guidelines.

RNW Media implements ‘community moderation’, which aims to encourage young people to engage in respectful dialogue, allowing everyone to have a voice. Online abuse and harassment can discourage people from discussing important issues or expressing themselves, and can lead them to give up on seeking out different opinions. Discussions on our platforms are therefore moderated so that they remain a safe place for discussion and debate.

Go to Assignment 6.3: Why and how to moderate?

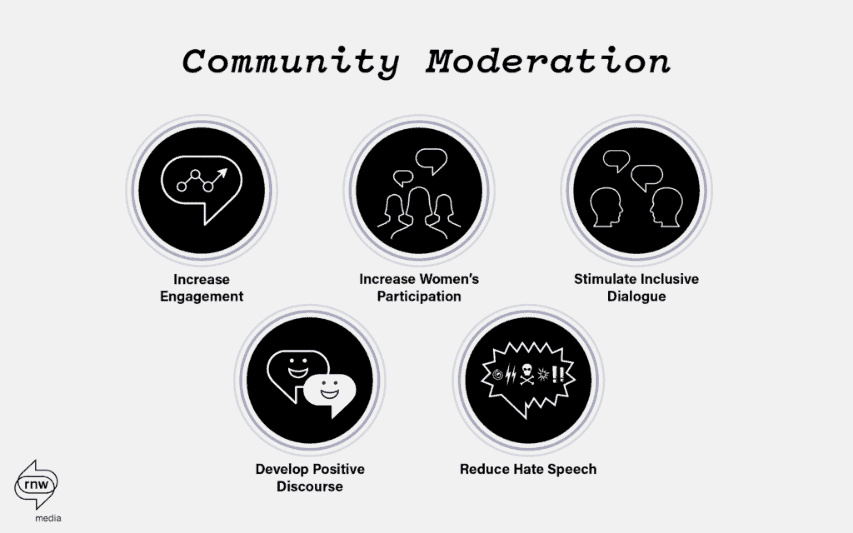

Community moderation objectives

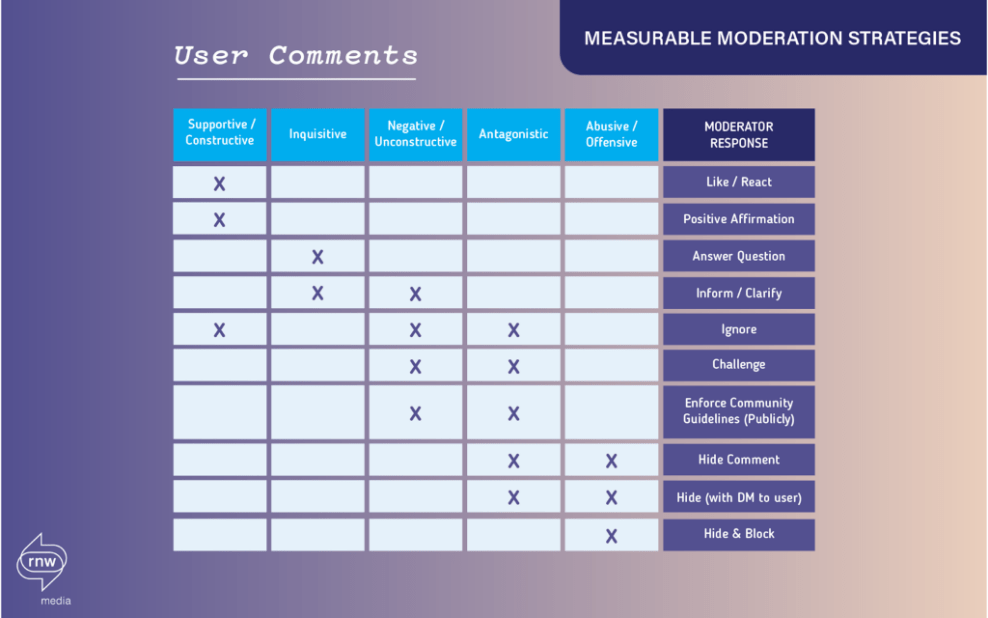

In order to reach these objectives, it is important to have a moderation strategy in place that guides your team in how to implement community moderation.

The Citizens Voice (CV) Moderation Strategy document outlines the different objectives of moderation, with the relevant goals, strategy and tactics. For each objective, the strategy describes how moderators can measure the impact of their work and monitor the success of moderation against that objective. The different tactics are described in more detail in the Moderation Guidelines.

Even once your moderation strategy and guidelines are in place, it is important to review and update them regularly. Are your tactics working? Are they leading to the desired engagement with your audience? Are you reaching your objectives?

Citizens Voice moderation strategy – Does it work?

How does interactive moderation in an online community affect user engagement? Research carried out in 2019-2020, covering three of our platforms (Yaga Burundi, Habari RDC, Benbere Mali), resulted in the following findings and recommendations.

Findings

- More interaction and more thoughtful comments: commenters are twice as likely to leave a thoughtful comment in a moderated conversation as in an unmoderated version of the same post.
- More unconstructive comments, but why? This is especially the case for sensitive subjects. It is possibly caused by more interactive discussion, which leads to a more thorough exchange of viewpoints and therefore also to more unconstructive comments.
- Imitation effect: commenters are likely to copy each other’s behaviour.
- Men were more likely than women to leave a thoughtful comment: women were more likely to respond with a one- or two-word feedback comment.

Recommendations

- If the imitation effect, as witnessed in some discussions, is indeed affecting the way people respond, moderators should be particularly alert in the first phase after a post has been published. Once the tone of the discussion is set, new comments are likely to follow the example of previous commenters. In the observed case on Habari the first contribution was positive, but how would the conversation have evolved if the first commenters had said: “Shit article”?
- The fact that moderation seems to increase interaction, and therefore might increase the likelihood of unconstructive comments, is a valuable insight for moderators. Although more in-depth discussions are desirable on the platform, it also means that once a discussion is “on”, moderators should frequently check whether it stays constructive and respectful and, if needed, steer it away from polarisation.
- The finding that men are more likely to leave a thoughtful comment and women are more likely to respond with a feedback comment might indicate that women are more hesitant to express themselves, and might therefore need a different approach. Perhaps women need some extra support or appreciation from the moderator when they share their thoughts.
- The findings of the research are based on user comments from the three Francophone African CV communities. Cultural background and the thematic focus of the online community potentially influence the effect of moderation on user engagement. It is recommended to replicate the experiments in other regions and for the Love Matters programme.
- It is important to discuss best practices with the moderators, as it is likely that each project applies the moderation strategy somewhat in its own way. To illustrate, during the last international week, one of the moderators explained that she protects users when they share information that is potentially harmful to themselves or that contains too much personal information. This form of user protection would be a valuable addition to the moderation strategy.

Go to Assignment 6.4: Create your own moderation guidelines.